Defining AI workflows

AI workflows typically require more than just a model call. They need pre- and post-processing steps like retrieving context, managing session history, reformatting inputs, validating outputs, or combining multiple model responses.

A flow is a special Genkit function that wraps your AI logic to provide:

- Type-safe inputs and outputs: Define schemas for static and runtime validation using Zod (JavaScript), Go structs (Go), or Pydantic models (Python)

- Streaming support: Stream partial responses or custom data

- Developer UI integration: Test and debug flows with visual traces

- Easy deployment: Deploy as HTTP endpoints to any platform, including Cloud Functions for Firebase (JavaScript)

Flows are lightweight. They’re written like regular functions with minimal abstraction.
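The following is a minimal, library-free Python sketch of what wrapping a function in a flow adds. The wrapper checks the input and output against declared types at runtime, and the result is still called like a regular function. `define_flow` and `FlowSchemaError` are hypothetical names used only for illustration. This is not Genkit's implementation, and a stub replaces the model call.

```python
from dataclasses import dataclass, fields, is_dataclass
from typing import Any, Callable


class FlowSchemaError(TypeError):
    """Raised when a flow's input or output does not match its declared type."""


def _validate(value: Any, schema: type, where: str) -> None:
    # Check the value's type, then the type of each declared dataclass field.
    if not isinstance(value, schema):
        raise FlowSchemaError(
            f"{where}: expected {schema.__name__}, got {type(value).__name__}"
        )
    if is_dataclass(schema):
        for f in fields(schema):
            field_value = getattr(value, f.name)
            # Only plain classes (str, int, ...) are checked in this sketch.
            if isinstance(f.type, type) and not isinstance(field_value, f.type):
                raise FlowSchemaError(f"{where}.{f.name}: expected {f.type.__name__}")


def define_flow(name: str, input_schema: type, output_schema: type):
    """Hypothetical decorator: validates a function's input and output."""

    def decorator(fn: Callable[[Any], Any]) -> Callable[[Any], Any]:
        def flow(value: Any) -> Any:
            _validate(value, input_schema, f"{name} input")
            result = fn(value)
            _validate(result, output_schema, f"{name} output")
            return result

        flow.flow_name = name  # metadata a registry or dev tool could read
        return flow

    return decorator


@dataclass
class MenuSuggestionInput:
    theme: str


@dataclass
class MenuSuggestionOutput:
    menu_item: str


@define_flow("menuSuggestionFlow", MenuSuggestionInput, MenuSuggestionOutput)
def menu_suggestion_flow(inp: MenuSuggestionInput) -> MenuSuggestionOutput:
    # Stub standing in for a real model call.
    return MenuSuggestionOutput(menu_item=f"{inp.theme.title()} Tasting Plate")


print(menu_suggestion_flow(MenuSuggestionInput(theme="bistro")).menu_item)
# prints: Bistro Tasting Plate
```

Calling `menu_suggestion_flow(MenuSuggestionInput(theme=42))` raises `FlowSchemaError` before the wrapped function runs. This is the kind of runtime check that the schema options on a real flow give you.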

Defining and calling flows

In its simplest form, a flow just wraps a function. The following example wraps a function that makes a model generation request:

```ts
import { googleAI } from '@genkit-ai/google-genai';
import { genkit, z } from 'genkit';

const ai = genkit({
  plugins: [googleAI()],
});

export const menuSuggestionFlow = ai.defineFlow(
  {
    name: 'menuSuggestionFlow',
    inputSchema: z.object({ theme: z.string() }),
    outputSchema: z.object({ menuItem: z.string() }),
  },
  async ({ theme }) => {
    const { text } = await ai.generate({
      model: googleAI.model('gemini-2.5-flash'),
      prompt: `Invent a menu item for a ${theme} themed restaurant.`,
    });
    return { menuItem: text };
  },
);
```

```go
package main

import (
	"context"
	"log"

	"github.com/firebase/genkit/go/ai"
	"github.com/firebase/genkit/go/genkit"
	"github.com/firebase/genkit/go/plugins/googlegenai"
)

type MenuSuggestionInput struct {
	Theme string `json:"theme"`
}

type MenuSuggestionOutput struct {
	MenuItem string `json:"menuItem"`
}

func main() {
	ctx := context.Background()

	g := genkit.Init(ctx,
		genkit.WithPlugins(&googlegenai.GoogleAI{}),
		genkit.WithDefaultModel("googleai/gemini-2.5-flash"),
	)

	menuSuggestionFlow := genkit.DefineFlow(g, "menuSuggestionFlow",
		func(ctx context.Context, input MenuSuggestionInput) (MenuSuggestionOutput, error) {
			resp, err := genkit.Generate(ctx, g,
				ai.WithPrompt("Invent a menu item for a %s themed restaurant.", input.Theme),
			)
			if err != nil {
				return MenuSuggestionOutput{}, err
			}

			return MenuSuggestionOutput{MenuItem: resp.Text()}, nil
		})

	// Run the flow once. Go rejects unused local variables, and this shows a direct call.
	out, err := menuSuggestionFlow.Run(ctx, MenuSuggestionInput{Theme: "bistro"})
	if err != nil {
		log.Fatal(err)
	}
	log.Println(out.MenuItem)
}
```

```python
from genkit.ai import Genkit
from genkit.plugins.google_genai import GoogleAI
from pydantic import BaseModel, Field

ai = Genkit(
    plugins=[GoogleAI()],
    model='googleai/gemini-2.5-flash',
)


class MenuSuggestionInput(BaseModel):
    theme: str = Field(description='Restaurant theme')


class MenuSuggestionOutput(BaseModel):
    menu_item: str = Field(description='Generated menu item')


@ai.flow()
async def menu_suggestion_flow(input: MenuSuggestionInput) -> MenuSuggestionOutput:
    response = await ai.generate(
        prompt=f'Invent a menu item for a {input.theme} themed restaurant.',
    )
    return MenuSuggestionOutput(menu_item=response.text)
```

Wrapping your generate calls in a flow adds functionality. You can run the flow from the Genkit CLI and from the developer UI. Flows are also required for several of Genkit’s features, including deployment and observability (later sections discuss these topics).

Input and output schemas

One of the most important advantages Genkit flows have over directly calling a model API is type safety of both inputs and outputs. When defining flows, you can define schemas for them.

You can define schemas using Zod, in much the same way as you define the output schema of a generate() call. However, unlike with generate(), you can also specify an input schema.

While it’s not mandatory to wrap your input and output schemas in z.object(), it’s considered best practice for these reasons:

- Better developer experience: Wrapping schemas in objects provides a better experience in the Developer UI by giving you labeled input fields.

- Future-proof API design: Object-based schemas allow for easy extensibility in the future. You can add new fields to your input or output schemas without breaking existing clients, which is a core principle of robust API design.

All examples in this documentation use object-based schemas to follow these best practices.

You can define schemas using Go structs with JSON tags. While you can use primitive types directly as input and output parameters, it’s considered best practice to use struct-based schemas for these reasons:

- Better developer experience: Struct-based schemas provide a better experience in the Developer UI by giving you labeled input fields.

- Future-proof API design: Struct-based schemas allow for easy extensibility in the future. You can add new fields to your input or output schemas without breaking existing clients, which is a core principle of robust API design.

All examples in this documentation use struct-based schemas to follow these best practices.

You can define schemas using Pydantic models. While you can use primitive types directly as input and output parameters, it’s considered best practice to use Pydantic model-based schemas for these reasons:

- Better developer experience: Model-based schemas provide a better experience in the Developer UI by giving you labeled input fields.

- Future-proof API design: Model-based schemas allow for easy extensibility in the future. You can add new fields to your input or output schemas without breaking existing clients, which is a core principle of robust API design.

All examples in this documentation use Pydantic model-based schemas to follow these best practices.
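The extensibility argument can be shown without any framework. In this sketch, standard-library dataclasses stand in for Pydantic models so that the code has no dependencies. "Version 2" of an input schema adds an optional `cuisine` field with a default value, so a payload from an older client that only sends `theme` still parses. The `cuisine` field and the `parse_input` helper are hypothetical. If the flow had taken a bare string instead of an object, there would be no place to add the new field without changing the input type itself.

```python
import json
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class MenuSuggestionInputV2:
    theme: str
    # Added in "v2": optional with a default, so older payloads remain valid.
    cuisine: Optional[str] = None


def parse_input(payload: str) -> MenuSuggestionInputV2:
    """Parse a JSON object into the schema, ignoring unknown keys."""
    data = json.loads(payload)
    known = {f.name for f in fields(MenuSuggestionInputV2)}
    return MenuSuggestionInputV2(**{k: v for k, v in data.items() if k in known})


old_client = parse_input('{"theme": "bistro"}')
new_client = parse_input('{"theme": "bistro", "cuisine": "French"}')
print(old_client)  # MenuSuggestionInputV2(theme='bistro', cuisine=None)
print(new_client)  # MenuSuggestionInputV2(theme='bistro', cuisine='French')
```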

Here’s a refinement of the last example. It defines a flow that takes a theme as input and outputs a structured menu item object:

```ts
import { z } from 'genkit';

const MenuItemSchema = z.object({
  dishname: z.string(),
  description: z.string(),
});

export const menuSuggestionFlowWithSchema = ai.defineFlow(
  {
    name: 'menuSuggestionFlow',
    inputSchema: z.object({ theme: z.string() }),
    outputSchema: MenuItemSchema,
  },
  async ({ theme }) => {
    const { output } = await ai.generate({
      model: googleAI.model('gemini-2.5-flash'),
      prompt: `Invent a menu item for a ${theme} themed restaurant.`,
      output: { schema: MenuItemSchema },
    });
    if (output == null) {
      throw new Error("Response doesn't satisfy schema.");
    }
    return output;
  },
);
```

```go
type MenuSuggestionInput struct {
	Theme string `json:"theme"`
}

type MenuItem struct {
	Name        string `json:"name"`
	Description string `json:"description"`
}

menuSuggestionFlow := genkit.DefineFlow(g, "menuSuggestionFlow",
	func(ctx context.Context, input MenuSuggestionInput) (MenuItem, error) {
		item, _, err := genkit.GenerateData[MenuItem](ctx, g,
			ai.WithPrompt("Invent a menu item for a %s themed restaurant.", input.Theme),
		)
		return item, err
	})
```

```python
from pydantic import BaseModel, Field


class MenuSuggestionInput(BaseModel):
    theme: str = Field(description='Restaurant theme')


class MenuItemSchema(BaseModel):
    dishname: str = Field(description='Name of the dish')
    description: str = Field(description='Description of the dish')


@ai.flow()
async def menu_suggestion_flow(input: MenuSuggestionInput) -> MenuItemSchema:
    response = await ai.generate(
        prompt=f'Invent a menu item for a {input.theme} themed restaurant.',
        output_schema=MenuItemSchema,
    )
    return response.output
```

Note that the schema of a flow does not necessarily have to line up with the schema of the model generation calls within the flow (in fact, a flow might not even contain model calls). Here’s a variation of the example that uses the structured output to format a simple string, which the flow returns.

```ts
export const menuSuggestionFlowMarkdown = ai.defineFlow(
  {
    name: 'menuSuggestionFlow',
    inputSchema: z.object({ theme: z.string() }),
    outputSchema: z.object({ formattedMenuItem: z.string() }),
  },
  async ({ theme }) => {
    const { output } = await ai.generate({
      model: googleAI.model('gemini-2.5-flash'),
      prompt: `Invent a menu item for a ${theme} themed restaurant.`,
      output: { schema: MenuItemSchema },
    });
    if (output == null) {
      throw new Error("Response doesn't satisfy schema.");
    }
    return {
      formattedMenuItem: `**${output.dishname}**: ${output.description}`,
    };
  },
);
```

In Go, note how MenuItem is passed as a type parameter to genkit.GenerateData. This is equivalent to passing the ai.WithOutputType() option and getting a value of that type in response.

```go
type MenuSuggestionInput struct {
	Theme string `json:"theme"`
}

type MenuItem struct {
	Name        string `json:"name"`
	Description string `json:"description"`
}

type FormattedMenuOutput struct {
	FormattedMenuItem string `json:"formattedMenuItem"`
}

menuSuggestionMarkdownFlow := genkit.DefineFlow(g, "menuSuggestionMarkdownFlow",
	func(ctx context.Context, input MenuSuggestionInput) (FormattedMenuOutput, error) {
		item, _, err := genkit.GenerateData[MenuItem](ctx, g,
			ai.WithPrompt("Invent a menu item for a %s themed restaurant.", input.Theme),
		)
		if err != nil {
			return FormattedMenuOutput{}, err
		}

		return FormattedMenuOutput{
			FormattedMenuItem: fmt.Sprintf("**%s**: %s", item.Name, item.Description),
		}, nil
	})
```

```python
from pydantic import BaseModel, Field


class MenuSuggestionInput(BaseModel):
    theme: str = Field(description='Restaurant theme')


class MenuItemSchema(BaseModel):
    dishname: str = Field(description='Name of the dish')
    description: str = Field(description='Description of the dish')


class FormattedMenuOutput(BaseModel):
    formatted_menu_item: str = Field(description='Formatted menu item in markdown')


@ai.flow()
async def menu_suggestion_flow(input: MenuSuggestionInput) -> FormattedMenuOutput:
    response = await ai.generate(
        prompt=f'Invent a menu item for a {input.theme} themed restaurant.',
        output_schema=MenuItemSchema,
    )
    output: MenuItemSchema = response.output
    return FormattedMenuOutput(
        formatted_menu_item=f'**{output.dishname}**: {output.description}'
    )
```

Calling flows

Once you’ve defined a flow, you can call it from your code:

```ts
const { menuItem } = await menuSuggestionFlow({ theme: 'bistro' });
```

```go
output, err := menuSuggestionFlow.Run(context.Background(), MenuSuggestionInput{Theme: "bistro"})
```

```python
response = await menu_suggestion_flow(MenuSuggestionInput(theme='bistro'))
```

The argument to the flow must conform to the input schema.

If you defined an output schema, the flow response will conform to it. For example, if you set the output schema to MenuItemSchema, the flow output will contain its properties:

```ts
const { dishname, description } = await menuSuggestionFlowWithSchema({ theme: 'bistro' });
```

```go
item, err := menuSuggestionFlow.Run(context.Background(), MenuSuggestionInput{Theme: "bistro"})
if err != nil {
	log.Fatal(err)
}

log.Println(item.Name)
log.Println(item.Description)
```

Streaming flows

Flows support streaming using an interface similar to the model generation streaming interface. Streaming is useful when your flow generates a large amount of output, because you can present the output to the user as it’s being generated, which improves the perceived responsiveness of your app. As a familiar example, chat-based LLM interfaces often stream their responses to the user as they are generated.

Here’s an example of a flow that supports streaming:

```ts
export const menuSuggestionStreamingFlow = ai.defineFlow(
  {
    name: 'menuSuggestionFlow',
    inputSchema: z.object({ theme: z.string() }),
    streamSchema: z.string(),
    outputSchema: z.object({ theme: z.string(), menuItem: z.string() }),
  },
  async ({ theme }, { sendChunk }) => {
    const { stream, response } = ai.generateStream({
      model: googleAI.model('gemini-2.5-flash'),
      prompt: `Invent a menu item for a ${theme} themed restaurant.`,
    });

    for await (const chunk of stream) {
      // Here, you could process the chunk in some way before sending it to
      // the output stream via sendChunk(). In this example, we output
      // the text of the chunk, unmodified.
      sendChunk(chunk.text);
    }

    const { text: menuItem } = await response;

    return {
      theme,
      menuItem,
    };
  },
);
```

- The streamSchema option specifies the type of values your flow streams. This does not necessarily need to be the same type as the outputSchema, which is the type of the flow’s complete output.

- The second parameter to your flow definition is called sideChannel. It provides features such as request context and the sendChunk callback. The sendChunk callback takes a single parameter, of the type specified by streamSchema. Whenever data becomes available within your flow, send the data to the output stream by calling this function.

In the above example, the values streamed by the flow are directly coupled to the values streamed by the model generation call inside the flow. Although this is often the case, it doesn’t have to be: you can output values to the stream using the callback as often as is useful for your flow.

Using iterator-based streaming

In Go, the recommended approach for streaming within flows is to use genkit.GenerateStream() or genkit.GenerateDataStream[T](). Both return iterators that integrate naturally with Go’s range syntax:

```go
type MenuItem struct {
	Name        string `json:"name"`
	Description string `json:"description"`
}

menuSuggestionFlow := genkit.DefineStreamingFlow(g, "menuSuggestionFlow",
	func(ctx context.Context, theme string, sendChunk core.StreamCallback[string]) (string, error) {
		stream := genkit.GenerateStream(ctx, g,
			ai.WithPrompt("Invent a menu item for a %s themed restaurant.", theme),
		)

		for result, err := range stream {
			if err != nil {
				return "", err
			}
			if result.Done {
				return result.Response.Text(), nil
			}
			// Pass each chunk to the flow's output stream.
			if err := sendChunk(ctx, result.Chunk.Text()); err != nil {
				return "", err
			}
		}

		return "", nil
	})
```

For structured output with strong typing, use genkit.GenerateDataStream[T]():

```go
type MenuItem struct {
	Name        string `json:"name"`
	Description string `json:"description"`
}

menuSuggestionFlow := genkit.DefineStreamingFlow(g, "menuSuggestionFlow",
	func(ctx context.Context, theme string, sendChunk core.StreamCallback[*MenuItem]) (*MenuItem, error) {
		stream := genkit.GenerateDataStream[*MenuItem](ctx, g,
			ai.WithPrompt("Invent a menu item for a %s themed restaurant.", theme),
		)

		for result, err := range stream {
			if err != nil {
				return nil, err
			}
			if result.Done {
				// result.Output is strongly typed as *MenuItem.
				return result.Output, nil
			}
			// result.Chunk is also strongly typed as *MenuItem.
			if err := sendChunk(ctx, result.Chunk); err != nil {
				return nil, err
			}
		}

		return nil, nil
	})
```

The iterator pattern makes the streaming logic clear and linear, avoiding nested callbacks and making error handling straightforward with Go’s standard patterns.
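Readers more familiar with Python can see the same iterator-style consumption in a plain async generator. `StreamResult` (with its `done`, `chunk`, and `output` fields) and the `fake_generate_stream` stub are hypothetical stand-ins, loosely modeled on the Go example above. They are not part of Genkit's Python API. The consumer forwards each intermediate chunk and returns as soon as it sees the final result, as the Go loop does.

```python
import asyncio
from dataclasses import dataclass
from typing import AsyncIterator, Callable, List, Optional


@dataclass
class StreamResult:
    done: bool
    chunk: Optional[str] = None   # set on intermediate results
    output: Optional[str] = None  # set on the final result


async def fake_generate_stream(prompt: str) -> AsyncIterator[StreamResult]:
    """Stub model stream (ignores the prompt): word chunks, then a final result."""
    words = ["Truffle ", "onion ", "soup"]
    for word in words:
        await asyncio.sleep(0)  # simulate waiting on the network
        yield StreamResult(done=False, chunk=word)
    yield StreamResult(done=True, output="".join(words))


async def menu_flow(theme: str, send_chunk: Callable[[str], None]) -> str:
    prompt = f"Invent a menu item for a {theme} themed restaurant."
    async for result in fake_generate_stream(prompt):
        if result.done:
            return result.output or ""
        send_chunk(result.chunk or "")
    return ""  # the stream ended without a final result


chunks: List[str] = []
final = asyncio.run(menu_flow("bistro", chunks.append))
print(chunks)  # ['Truffle ', 'onion ', 'soup']
print(final)   # Truffle onion soup
```

In Python, errors surface as exceptions raised from the `async for` loop, so no separate error value is needed at each step.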

Using callback-based streaming

Alternatively, you can use the callback-based approach with ai.WithStreaming(). This is useful when you need to combine streaming with other Generate options or when integrating with existing callback-based code:

```go
type MenuSuggestionInput struct {
	Theme string `json:"theme"`
}

type Menu struct {
	Theme string     `json:"theme"`
	Items []MenuItem `json:"items"`
}

type MenuItem struct {
	Name        string `json:"name"`
	Description string `json:"description"`
}

menuSuggestionFlow := genkit.DefineStreamingFlow(g, "menuSuggestionFlow",
	func(ctx context.Context, input MenuSuggestionInput, callback core.StreamCallback[string]) (Menu, error) {
		item, _, err := genkit.GenerateData[MenuItem](ctx, g,
			ai.WithPrompt("Invent a menu item for a %s themed restaurant.", input.Theme),
			ai.WithStreaming(func(ctx context.Context, chunk *ai.ModelResponseChunk) error {
				// Process the chunk and send it to the flow's output stream.
				return callback(ctx, chunk.Text())
			}),
		)
		if err != nil {
			return Menu{}, err
		}

		return Menu{
			Theme: input.Theme,
			Items: []MenuItem{item},
		}, nil
	})
```

The string type in StreamCallback[string] specifies the type of values your flow streams. This does not necessarily need to be the same type as the return type, which is the type of the flow’s complete output (Menu in this example).

In these examples, the values streamed by the flow are directly coupled to the values streamed by the generation call inside the flow. Although this is often the case, it doesn’t have to be: you can output values to the stream using the callback as often as is useful for your flow.

```python
from pydantic import BaseModel, Field


class MenuSuggestionInput(BaseModel):
    theme: str = Field(description='Restaurant theme')


class MenuOutput(BaseModel):
    theme: str = Field(description='Restaurant theme')
    menu_item: str = Field(description='Generated menu item')


@ai.flow()
async def menu_suggestion_flow(input: MenuSuggestionInput, ctx) -> MenuOutput:
    stream, response = ai.generate_stream(
        prompt=f'Invent a menu item for a {input.theme} themed restaurant.',
    )

    async for chunk in stream:
        ctx.send_chunk(chunk.text)

    return MenuOutput(
        theme=input.theme,
        menu_item=(await response).text,
    )
```

The second parameter to your flow definition is called the “side channel”. It provides features such as request context and the send_chunk callback. The send_chunk callback takes a single parameter. Whenever data becomes available within your flow, send the data to the output stream by calling this function.

In the above example, the values streamed by the flow are directly coupled to the values streamed by the generate_stream() call inside the flow. Although this is often the case, it doesn’t have to be: you can output values to the stream using the callback as often as is useful for your flow.
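The following sketch streams values that are not model chunks. The flow reports progress messages through its callback, and its final return value is a different type entirely. Everything here is a plain-Python stand-in: `send_chunk` is an ordinary callable that plays the role of `ctx.send_chunk`, and `retrieve_notes` and `generate_item` are hypothetical stubs for real retrieval and generation steps.

```python
import asyncio
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class MenuOutput:
    theme: str
    menu_item: str


async def retrieve_notes(theme: str) -> List[str]:
    await asyncio.sleep(0)  # stand-in for a database or vector-store lookup
    return [f"{theme} classics", "seasonal produce"]


async def generate_item(theme: str, notes: List[str]) -> str:
    await asyncio.sleep(0)  # stand-in for a model call
    return f"{theme.title()} plate inspired by {len(notes)} notes"


async def menu_with_progress_flow(
    theme: str, send_chunk: Callable[[str], None]
) -> MenuOutput:
    # The stream carries status strings; the return value is a MenuOutput.
    send_chunk("retrieving notes")
    notes = await retrieve_notes(theme)
    send_chunk(f"found {len(notes)} notes")
    item = await generate_item(theme, notes)
    send_chunk("done")
    return MenuOutput(theme=theme, menu_item=item)


events: List[str] = []
result = asyncio.run(menu_with_progress_flow("bistro", events.append))
print(events)            # ['retrieving notes', 'found 2 notes', 'done']
print(result.menu_item)  # Bistro plate inspired by 2 notes
```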

Calling streaming flows

Streaming flows are also callable like any other flow, but their stream() method immediately returns a response object rather than a promise:

```ts
const response = menuSuggestionStreamingFlow.stream({ theme: 'Danube' });
```

The response object has a stream property, which you can use to iterate over the streaming output of the flow as it’s generated:

```ts
for await (const chunk of response.stream) {
  console.log('chunk', chunk);
}
```

You can also get the complete output of the flow, as you can with a non-streaming flow:

```ts
const output = await response.output;
```

Note that the streaming output of a flow might not be the same type as the complete output. The streaming output conforms to streamSchema, whereas the complete output conforms to outputSchema.

Streaming flows can be run like non-streaming flows with menuSuggestionFlow.Run(ctx, MenuSuggestionInput{Theme: "bistro"}), or they can be streamed:

```go
streamCh, err := menuSuggestionFlow.Stream(context.Background(), MenuSuggestionInput{Theme: "bistro"})
if err != nil {
	log.Fatal(err)
}

for result := range streamCh {
	if result.Err != nil {
		log.Fatalf("Stream error: %v", result.Err)
	}
	if result.Done {
		log.Printf("Menu with %s theme:\n", result.Output.Theme)
		for _, item := range result.Output.Items {
			log.Printf(" - %s: %s", item.Name, item.Description)
		}
	} else {
		log.Println("Stream chunk:", result.Stream)
	}
}
```

In Python, a flow’s stream() method returns immediately rather than waiting for the flow to finish. It returns a pair: an async iterable that you can use to iterate over the streaming output of the flow as it’s generated, and the final response.

```python
stream, response = menu_suggestion_flow.stream(MenuSuggestionInput(theme='bistro'))
async for chunk in stream:
    print(chunk)
```

You can also get the complete output of the flow, as you can with a non-streaming flow. The final response is a future that you can await:

```python
print(await response)
```

Note that the streaming output of a flow might not be the same type as the complete output.
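The calling convention described above returns a stream you can iterate and a final response you await. Its shape can be sketched with `asyncio` alone. `StreamingFlow` and its `stream()` method are hypothetical and only mimic that pattern; this is not Genkit's implementation. The key design point is that `stream()` starts the work in a background task and returns at once, so the caller can consume chunks while the flow is still running.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable, List, Tuple

FlowFn = Callable[[str, Callable[[str], None]], Awaitable[str]]
_DONE = object()  # sentinel marking the end of the chunk queue


class StreamingFlow:
    def __init__(self, fn: FlowFn) -> None:
        self._fn = fn

    def stream(self, value: str) -> Tuple[AsyncIterator[str], "asyncio.Task[str]"]:
        # Must be called while an event loop is running (create_task needs one).
        queue: asyncio.Queue = asyncio.Queue()

        async def run() -> str:
            try:
                return await self._fn(value, queue.put_nowait)
            finally:
                queue.put_nowait(_DONE)  # always unblock the consumer

        task = asyncio.create_task(run())

        async def chunks() -> AsyncIterator[str]:
            while (item := await queue.get()) is not _DONE:
                yield item

        return chunks(), task


async def shout(theme: str, send_chunk: Callable[[str], None]) -> str:
    for word in theme.split():
        send_chunk(word.upper())
        await asyncio.sleep(0)
    return theme.upper()


async def main() -> Tuple[List[str], str]:
    stream, response = StreamingFlow(shout).stream("french bistro")
    received = [chunk async for chunk in stream]
    return received, await response


received, final = asyncio.run(main())
print(received)  # ['FRENCH', 'BISTRO']
print(final)     # FRENCH BISTRO
```

If the wrapped function raises, the `finally` clause still ends the stream, and awaiting `response` re-raises the error.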

Running flows from the command line

You can run flows from the command line using the Genkit CLI tool:

```bash
# JavaScript and Go
genkit flow:run menuSuggestionFlow '{"theme": "French"}'
# Python
genkit flow:run menu_suggestion_flow '{"theme": "French"}'
```

For streaming flows, you can print the streaming output to the console by adding the -s flag:

```bash
# JavaScript and Go
genkit flow:run menuSuggestionFlow '{"theme": "French"}' -s
# Python
genkit flow:run menu_suggestion_flow '{"theme": "French"}' -s
```

Running a flow from the command line is useful for testing a flow. It is also useful for running flows that perform tasks needed on an ad hoc basis, such as a flow that ingests a document into your vector database.

Debugging flows

One of the advantages of encapsulating AI logic within a flow is that you can test and debug the flow independently from your app using the Genkit developer UI.

The developer UI relies on the Go app continuing to run, even if the logic has completed. If you are just getting started and Genkit is not part of a broader app, add select {} as the last line of main() to prevent the app from shutting down so that you can inspect it in the UI.

To start the developer UI, run the following command from your project directory:

```bash
# JavaScript
genkit start -- tsx --watch src/your-code.ts
# Go
genkit start -- go run .
# Python
genkit start -- python app.py
```

Update the command after -- (for example, python app.py) to match the way you normally run your app.

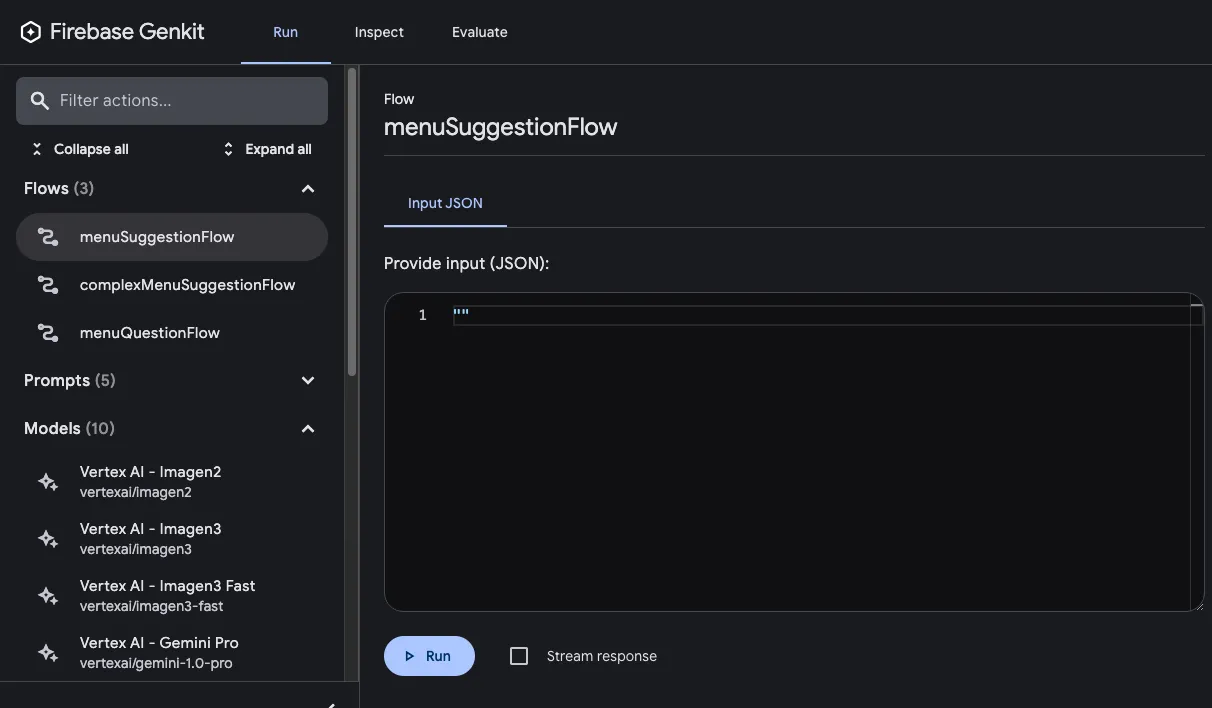

From the Run tab of developer UI, you can run any of the flows defined in your project:

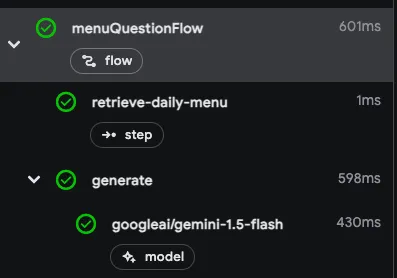

After you’ve run a flow, you can inspect a trace of the flow invocation by either clicking View trace or looking on the Inspect tab.

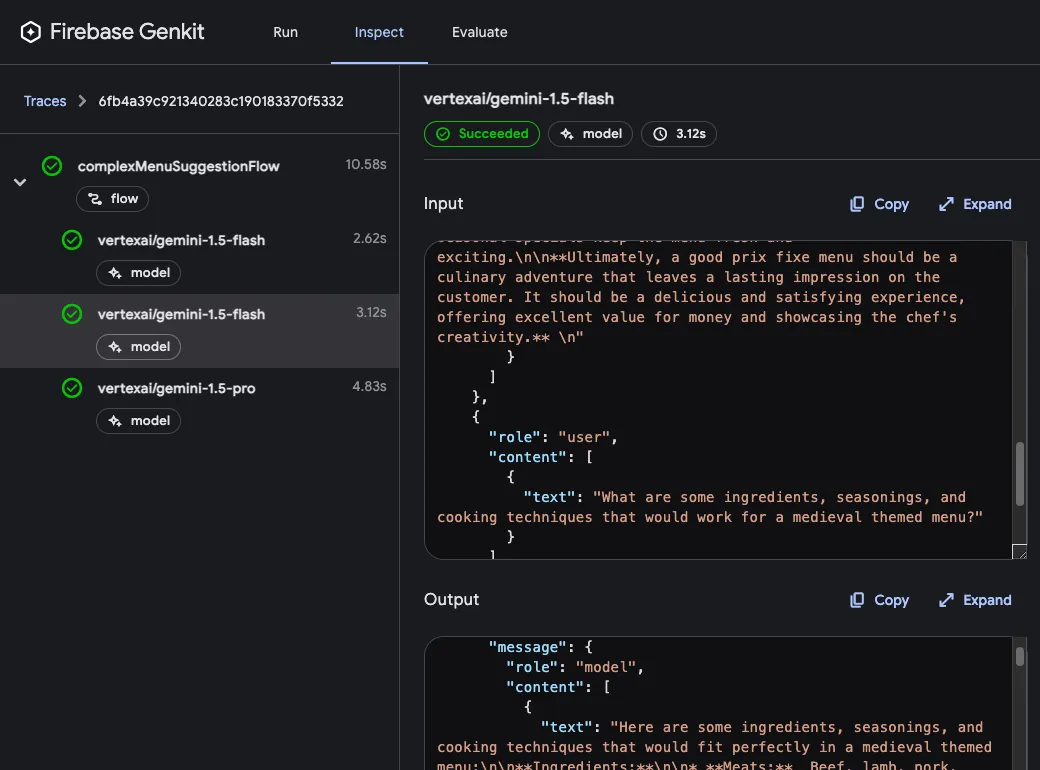

In the trace viewer, you can see details about the execution of the entire flow, as well as details for each of the individual steps within the flow. For example, consider the following flow, which contains several generation requests:

```ts
const PrixFixeMenuSchema = z.object({
  starter: z.string(),
  soup: z.string(),
  main: z.string(),
  dessert: z.string(),
});

export const complexMenuSuggestionFlow = ai.defineFlow(
  {
    name: 'complexMenuSuggestionFlow',
    inputSchema: z.object({ theme: z.string() }),
    outputSchema: PrixFixeMenuSchema,
  },
  async ({ theme }): Promise<z.infer<typeof PrixFixeMenuSchema>> => {
    const chat = ai.chat({ model: googleAI.model('gemini-2.5-flash') });
    await chat.send('What makes a good prix fixe menu?');
    await chat.send(
      'What are some ingredients, seasonings, and cooking techniques that ' +
        `would work for a ${theme} themed menu?`,
    );
    const { output } = await chat.send({
      prompt:
        `Based on our discussion, invent a prix fixe menu for a ${theme} ` +
        'themed restaurant.',
      output: {
        schema: PrixFixeMenuSchema,
      },
    });
    if (!output) {
      throw new Error('No data generated.');
    }
    return output;
  },
);
```

When you run this flow, the trace viewer shows you details about each generation request, including its output:

Flow steps

In the last example, you saw that each generation request showed up as a separate step in the trace viewer. Each of Genkit’s fundamental actions shows up as a separate step of a flow:

- generate()

- Chat.send()

- embed()

- index()

- retrieve()

If you want to include code other than the above in your traces, you can do so by wrapping the code in a run() call. You might do this for calls to third-party libraries that are not Genkit-aware, or for any critical section of code.
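The following minimal tracing sketch shows why wrapping code in a step makes it visible. A hypothetical `run(name, fn)` helper records a span containing the step name, its duration, and any error. It only imitates the idea behind `run()`. The `Span` type and the in-memory `TRACE` list are assumptions for illustration, and Genkit records its real traces differently.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Optional, TypeVar

T = TypeVar("T")


@dataclass
class Span:
    name: str
    duration_s: float
    error: Optional[str] = None


TRACE: List[Span] = []  # hypothetical in-memory trace for one flow run


def run(name: str, fn: Callable[[], T]) -> T:
    """Execute fn as a named step and record a span, even if fn raises."""
    start = time.perf_counter()
    error: Optional[str] = None
    try:
        return fn()
    except Exception as exc:
        error = repr(exc)
        raise
    finally:
        TRACE.append(Span(name, time.perf_counter() - start, error))


def retrieve_daily_menu() -> str:
    # Placeholder for a database query or HTTP fetch.
    return "Soup of the day: onion"


menu = run("retrieve-daily-menu", retrieve_daily_menu)
print(menu)                     # Soup of the day: onion
print([s.name for s in TRACE])  # ['retrieve-daily-menu']
```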

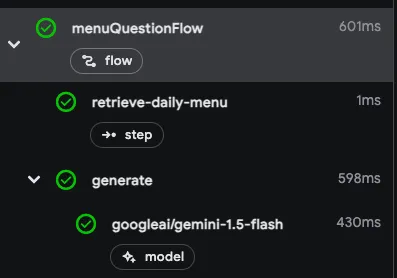

For example, here’s a flow with two steps: the first step retrieves a menu using some unspecified method, and the second step includes the menu as context for a generate() call.

```ts
export const menuQuestionFlow = ai.defineFlow(
  {
    name: 'menuQuestionFlow',
    inputSchema: z.object({ question: z.string() }),
    outputSchema: z.object({ answer: z.string() }),
  },
  async ({ question }): Promise<{ answer: string }> => {
    const menu = await ai.run('retrieve-daily-menu', async (): Promise<string> => {
      // Retrieve today's menu. (This could be a database access or simply
      // fetching the menu from your website.)

      // ...

      // Placeholder: return the menu text you retrieved. Returning the outer
      // `menu` constant here would throw, because it isn't initialized yet.
      const todaysMenu = '...';
      return todaysMenu;
    });
    const { text } = await ai.generate({
      model: googleAI.model('gemini-2.5-flash'),
      system: "Help the user answer questions about today's menu.",
      prompt: question,
      docs: [{ content: [{ text: menu }] }],
    });
    return { answer: text };
  },
);
```

Because the retrieval step is wrapped in a run() call, it’s included as a step in the trace viewer.

After you’ve run a flow, you can inspect a trace of the flow invocation by either clicking View trace or looking at the Inspect tab.

Flow steps

Each of Genkit’s fundamental actions shows up as a separate step in the trace viewer:

- genkit.Generate()

- genkit.Embed()

- genkit.Retrieve()

If you want to include code other than the above in your traces, you can do so by wrapping the code in a genkit.Run() call. You might do this for calls to third-party libraries that are not Genkit-aware, or for any critical section of code.

For example, here’s a flow with two steps: the first step retrieves a menu using some unspecified method, and the second step includes the menu as context for a genkit.Generate() call.

```go
type MenuQuestionInput struct {
	Question string `json:"question"`
}

type MenuQuestionOutput struct {
	Answer string `json:"answer"`
}

menuQuestionFlow := genkit.DefineFlow(g, "menuQuestionFlow",
	func(ctx context.Context, input MenuQuestionInput) (MenuQuestionOutput, error) {
		menu, err := genkit.Run(ctx, "retrieve-daily-menu", func() (string, error) {
			// Retrieve today's menu. (This could be a database access or simply
			// fetching the menu from your website.)

			// ...

			// Placeholder: return the menu text you retrieved. The outer `menu`
			// variable is not in scope inside this closure.
			todaysMenu := "..."
			return todaysMenu, nil
		})
		if err != nil {
			return MenuQuestionOutput{}, err
		}

		resp, err := genkit.Generate(ctx, g,
			ai.WithPrompt(input.Question),
			ai.WithSystem("Help the user answer questions about today's menu."),
			ai.WithDocs(ai.NewTextPart(menu)),
		)
		if err != nil {
			return MenuQuestionOutput{}, err
		}

		return MenuQuestionOutput{Answer: resp.Text()}, nil
	})
```

Because the retrieval step is wrapped in a genkit.Run() call, it’s included as a step in the trace viewer.

After you’ve run a flow, you can inspect a trace of the flow invocation by either clicking View trace or looking at the Inspect tab.

In the trace viewer, you can see details about the execution of the entire flow, as well as details for each of the individual steps within the flow.

Deploying flows

You can deploy your flows directly as web API endpoints, ready for you to call from your app clients. Deployment is discussed in detail on several other pages, but this section gives brief overviews of your deployment options.

Cloud Functions for Firebase

To deploy flows with Cloud Functions for Firebase, use the onCallGenkit feature of firebase-functions/https. onCallGenkit wraps your flow in a callable function. You can set an auth policy and configure App Check.

```ts
import { hasClaim, onCallGenkit } from 'firebase-functions/https';
import { defineSecret } from 'firebase-functions/params';

const apiKey = defineSecret('GOOGLE_AI_API_KEY');

const menuSuggestionFlow = ai.defineFlow(
  {
    name: 'menuSuggestionFlow',
    inputSchema: z.object({ theme: z.string() }),
    outputSchema: z.object({ menuItem: z.string() }),
  },
  async ({ theme }) => {
    // ...
    return { menuItem: 'Generated menu item would go here' };
  },
);

export const menuSuggestion = onCallGenkit(
  {
    secrets: [apiKey],
    authPolicy: hasClaim('email_verified'),
  },
  menuSuggestionFlow,
);
```

Express.js

To deploy flows using any Node.js hosting platform, such as Cloud Run, define your flows using defineFlow() and then call startFlowServer():

```ts
import { startFlowServer } from '@genkit-ai/express';

export const menuSuggestionFlow = ai.defineFlow(
  {
    name: 'menuSuggestionFlow',
    inputSchema: z.object({ theme: z.string() }),
    outputSchema: z.object({ result: z.string() }),
  },
  async ({ theme }) => {
    // ...
    return { result: 'Generated result would go here' };
  },
);

startFlowServer({
  flows: [menuSuggestionFlow],
});
```

startFlowServer serves each flow passed in the flows option as an HTTP endpoint (for example, http://localhost:3400/menuSuggestionFlow).

If needed, you can customize the flows server to serve a specific list of flows, as shown below. You can also specify a custom port (it will use the PORT environment variable if set) or specify CORS settings.

```ts
export const flowA = ai.defineFlow(
  {
    name: 'flowA',
    inputSchema: z.object({ subject: z.string() }),
    outputSchema: z.object({ response: z.string() }),
  },
  async ({ subject }) => {
    // ...
    return { response: 'Generated response would go here' };
  },
);

export const flowB = ai.defineFlow(
  {
    name: 'flowB',
    inputSchema: z.object({ subject: z.string() }),
    outputSchema: z.object({ response: z.string() }),
  },
  async ({ subject }) => {
    // ...
    return { response: 'Generated response would go here' };
  },
);

startFlowServer({
  flows: [flowB],
  port: 4567,
  cors: {
    origin: '*',
  },
});
```

net/http Server

To deploy a flow using any Go hosting platform, such as Cloud Run, define your flow using genkit.DefineFlow() and start a net/http server with the provided flow handler using genkit.Handler():

```go
package main

import (
	"context"
	"log"
	"net/http"

	"github.com/firebase/genkit/go/ai"
	"github.com/firebase/genkit/go/genkit"
	"github.com/firebase/genkit/go/plugins/googlegenai"
	"github.com/firebase/genkit/go/plugins/server"
)

type MenuSuggestionInput struct {
	Theme string `json:"theme"`
}

type MenuItem struct {
	Name        string `json:"name"`
	Description string `json:"description"`
}

func main() {
	ctx := context.Background()

	g := genkit.Init(ctx,
		genkit.WithPlugins(&googlegenai.GoogleAI{}),
		genkit.WithDefaultModel("googleai/gemini-2.5-flash"),
	)

	menuSuggestionFlow := genkit.DefineFlow(g, "menuSuggestionFlow",
		func(ctx context.Context, input MenuSuggestionInput) (MenuItem, error) {
			item, _, err := genkit.GenerateData[MenuItem](ctx, g,
				ai.WithPrompt("Invent a menu item for a %s themed restaurant.", input.Theme),
			)
			return item, err
		})

	mux := http.NewServeMux()
	mux.HandleFunc("POST /menuSuggestionFlow", genkit.Handler(menuSuggestionFlow))
	log.Fatal(server.Start(ctx, "127.0.0.1:3400", mux))
}
```

server.Start() is an optional helper function that starts the server and manages its lifecycle, including capturing interrupt signals to ease local development, but you may use your own method.

To serve all the flows defined in your codebase, you can use genkit.ListFlows():

```go
mux := http.NewServeMux()
for _, flow := range genkit.ListFlows(g) {
	mux.HandleFunc("POST /"+flow.Name(), genkit.Handler(flow))
}
log.Fatal(server.Start(ctx, "127.0.0.1:3400", mux))
```

Other server frameworks

You can also use other server frameworks to deploy your flows. For example, you can use Gin with just a few lines:

```go
router := gin.Default()
for _, flow := range genkit.ListFlows(g) {
	// Create the handler once per flow. This also avoids capturing the loop
	// variable in the closure on Go versions before 1.22.
	handler := genkit.Handler(flow)
	router.POST("/"+flow.Name(), func(c *gin.Context) {
		handler(c.Writer, c.Request)
	})
}
log.Fatal(router.Run(":3400"))
```

To deploy flows using Flask, you can use the genkit_flask_handler decorator to expose your flows as HTTP endpoints:

```python
import os

from flask import Flask
from genkit.ai import Genkit
from genkit.plugins.flask import genkit_flask_handler
from genkit.plugins.google_genai import GoogleAI
from pydantic import BaseModel, Field

# Initialize Genkit
ai = Genkit(
    plugins=[GoogleAI()],
    model='googleai/gemini-2.5-flash',
)

# Create Flask app
app = Flask(__name__)


class MenuSuggestionInput(BaseModel):
    theme: str = Field(description='Restaurant theme')


class MenuSuggestionOutput(BaseModel):
    menu_item: str = Field(description='Generated menu item')


@app.post('/menuSuggestionFlow')
@genkit_flask_handler(ai)
@ai.flow()
async def menu_suggestion_flow(input: MenuSuggestionInput, ctx) -> MenuSuggestionOutput:
    response = await ai.generate(
        prompt=f'Invent a menu item for a {input.theme} themed restaurant.',
        on_chunk=ctx.send_chunk,
    )
    return MenuSuggestionOutput(menu_item=response.text)


if __name__ == '__main__':
    app.run(host='0.0.0.0', port=int(os.environ.get('PORT', 3400)), debug=False)
```

The genkit_flask_handler decorator automatically handles:

- Request parsing and validation

- Streaming responses via Server-Sent Events

- Error handling and response formatting

- Integration with Genkit’s flow system

For production deployment, you can use a WSGI server like Gunicorn. Here, main:app refers to the app object in main.py; adjust it to match your module name:

```bash
gunicorn --bind 0.0.0.0:3400 --workers 4 main:app
```

Calling deployed flows

Once your flow is deployed, you can call it with a POST request:

```bash
curl -X POST "http://localhost:3400/menuSuggestionFlow" \
  -H "Content-Type: application/json" \
  -d '{"data": {"theme": "banana"}}'
```

For streaming responses, you can add the Accept: text/event-stream header:

```bash
curl -X POST "http://localhost:3400/menuSuggestionFlow" \
  -H "Content-Type: application/json" \
  -H "Accept: text/event-stream" \
  -d '{"data": {"theme": "banana"}}'
```

You can also use the Genkit web client library to call flows from web applications. See Accessing flows from the client for detailed examples of using the runFlow() and streamFlow() functions.
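A client for these endpoints can be sketched with the Python standard library. As in the curl examples above, the request body wraps the flow input in a `data` field. For the streaming case, the parser handles generic Server-Sent Events `data:` lines and decodes each event as JSON. The exact shape of each event payload is an assumption here; check it against your server before relying on specific keys. `build_request` and `parse_sse` are hypothetical helpers, the sample events are made up, and nothing in this sketch sends a network request.

```python
import json
import urllib.request
from typing import Any, Iterable, Iterator, List


def build_request(url: str, flow_input: dict, stream: bool = False) -> urllib.request.Request:
    """Build a POST request whose JSON body wraps the input as {"data": ...}."""
    headers = {"Content-Type": "application/json"}
    if stream:
        headers["Accept"] = "text/event-stream"
    body = json.dumps({"data": flow_input}).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def parse_sse(lines: Iterable[str]) -> Iterator[Any]:
    """Yield the JSON payload of each SSE event; non-data fields are ignored."""
    buffer: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line.startswith("data:"):
            value = line[len("data:"):]
            # The SSE format allows one optional space after the colon.
            buffer.append(value[1:] if value.startswith(" ") else value)
        elif line == "" and buffer:
            # A blank line ends an event; multi-line data is joined with "\n".
            yield json.loads("\n".join(buffer))
            buffer = []
    if buffer:  # tolerate a final event without a trailing blank line
        yield json.loads("\n".join(buffer))


req = build_request("http://localhost:3400/menuSuggestionFlow", {"theme": "banana"}, stream=True)
print(req.get_method(), req.data)  # POST b'{"data": {"theme": "banana"}}'

# Made-up sample events, for illustration only.
sample = ['data: {"chunk": "Banana"}\n', '\n', 'data: {"final": "Banana split"}\n', '\n']
print(list(parse_sse(sample)))  # [{'chunk': 'Banana'}, {'final': 'Banana split'}]
```

With a live server, you would pass `req` to `urllib.request.urlopen()` and feed the decoded response lines to `parse_sse`.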

Learn more about deployment

For detailed deployment instructions and platform-specific guides, see: